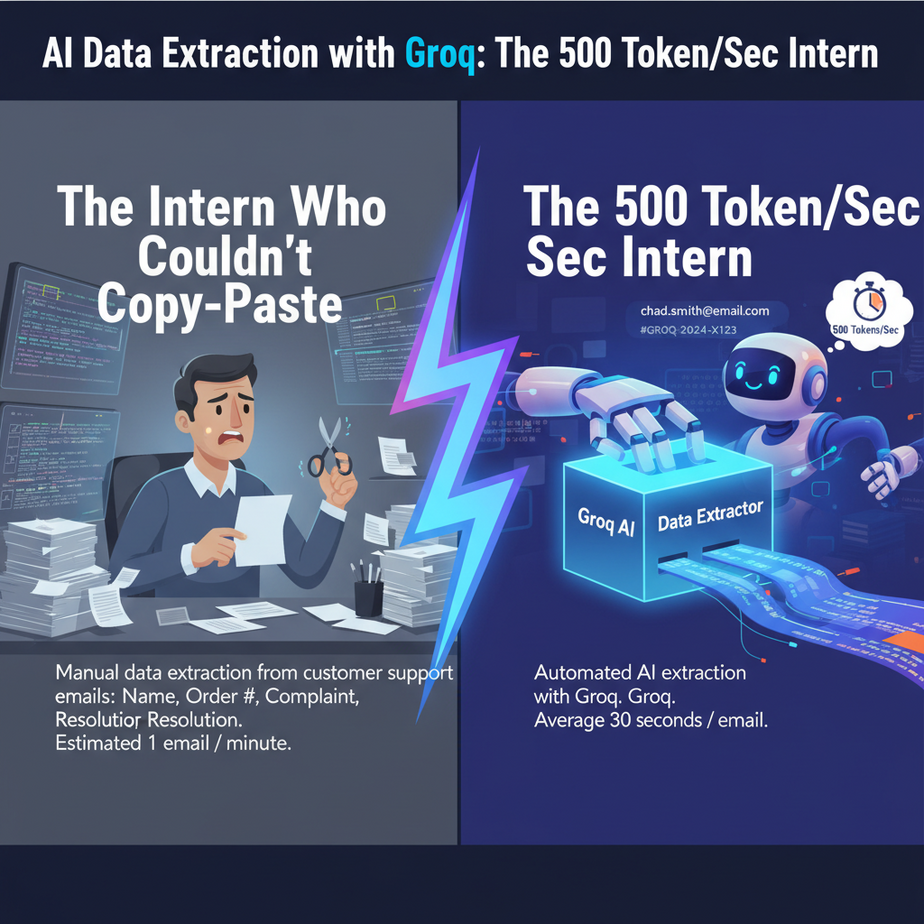

The Intern Who Couldn’t Copy-Paste

Let’s talk about Chad. We hired Chad as an intern. His one job was simple: take customer support emails, find the important bits—name, order number, complaint—and paste them into our support software. A task a reasonably intelligent hamster could perform.

Chad was not a reasonably intelligent hamster.

He’d get names wrong. He’d paste order numbers into the “customer mood” field. He’d summarize a 300-word complaint about a broken gizmo as “customer sad.” He was slow, expensive (he drank all the good kombucha), and the data he produced was a toxic waste dump.

We fired Chad. Well, we “promoted him to customer.” Then we built his replacement: an AI automation that does the same job, perfectly, in less than a tenth of a second, for fractions of a penny.

Today, you’re going to build that same robot. Welcome to the Academy.

Why This Matters

Every business on earth runs on data. But most of that data starts as messy, “unstructured” text: emails, reviews, transcripts, contact forms, support tickets. Getting that chaos into a clean, structured format (like a spreadsheet or a CRM record) is a soul-crushing bottleneck.

This is the work we give to interns, virtual assistants, or—worst of all—ourselves. It’s slow, error-prone, and impossible to scale. Want to process 10,000 documents? Hire 10 more Chads. Good luck with that.

This automation replaces that entire manual workflow. It’s a specialized robot that reads, understands, and perfectly formats information at a speed that feels like science fiction. This isn’t about saving a few minutes; it’s about building systems that can handle infinite inputs without crumbling. It’s about turning your messiest data streams into your most valuable assets.

What This Tool / Workflow Actually Is

We’re using two key concepts here:

- Groq (The Engine): Groq isn’t a new AI model like ChatGPT or Llama. Think of it as a specialized race car engine for AI. It takes existing, powerful open-source models (we’ll use Llama 3) and runs them on custom hardware called LPUs (Language Processing Units). The result? Absurd speed. We’re talking 300-800 tokens per second. For our task, that means near-instant results.

- Structured Data Extraction (The Job): This is the process of teaching an AI to act like a meticulous data entry clerk. We give it a blob of text and a template (a “schema”). The AI reads the text and fills in our template, returning it as a perfectly formatted JSON object. JSON is just a clean, predictable way to organize data that nearly every application on the planet can understand.
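
To make “JSON” concrete, here is a minimal sketch using only Python’s standard library. The sample record and its field names are made up for illustration; nothing here talks to Groq yet.

```python
import json

# A JSON document is just text; this sample record is invented for illustration.
raw = '{"customer_name": "Jane Doe", "order_number": "G123-89B", "sentiment": null}'

# json.loads turns the text into a regular Python dictionary.
record = json.loads(raw)

print(record["customer_name"])  # Jane Doe
print(record["sentiment"])      # None (JSON null becomes Python None)

# json.dumps goes the other way, producing text another application can read.
print(json.dumps(record, indent=2))
```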

What this is NOT: This is not a creative writer or a brainstorming partner. We are using a powerful, creative AI for a very boring, repetitive, and precise job—which is where the real business value is.

Prerequisites

This is easier than it sounds. If you can copy and paste, you can do this. I’m serious.

- A Groq Account: Go to console.groq.com and sign up. It’s free, and they give you a generous amount of credits to start. You’ll need to create an API key. Just click “API Keys” and “Create API Key.” Copy it and save it somewhere safe.

- Python 3: Most computers already have it. If not, a quick Google search for “install python” will get you there. Don’t panic. You will write maybe five lines of actual code.

- The Groq Python Library: Open your computer’s terminal (or Command Prompt on Windows) and type this one command:

pip install groq

That’s it. You’re ready. No complex setup, no 20-hour course on software development.

Step-by-Step Tutorial

Let’s build our Chad-replacement robot, piece by piece.

Step 1: Set Up Your Python File

Create a new file called extractor.py. The first few lines are just setup—telling Python we want to use the Groq library and loading our secret API key.

It’s best practice to set your API key as an environment variable, but for this lesson, we’ll just paste it in. Replace "YOUR_GROQ_API_KEY" with the key you copied.

import os

from groq import Groq

client = Groq(
    api_key="YOUR_GROQ_API_KEY",
)
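
If you would rather follow the environment-variable best practice, a minimal sketch might look like this. The helper name `load_api_key` is hypothetical, and the variable name `GROQ_API_KEY` is an assumption; use whatever name you actually set in your shell.

```python
import os


def load_api_key(var_name: str = "GROQ_API_KEY") -> str:
    """Read the API key from an environment variable, failing loudly if it is missing."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {var_name} environment variable before running this script."
        )
    return key


# Usage (assumes the groq package from the Prerequisites is installed):
#   from groq import Groq
#   client = Groq(api_key=load_api_key())
```

The point of the design is that the key never appears in the file itself, so you can share or commit extractor.py without leaking it.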

Step 2: Define Your Unstructured Text

This is the messy data you want to process. Let’s use a sample customer support email.

unstructured_text = """
Hi support team,

I'm writing because my recent order, #G123-89B, hasn't shipped yet.
I ordered the 'Quantum Widget Pro' on May 15th, 2024.
I'm getting pretty frustrated because I needed it for a project this week.

Thanks,
Jane Doe
jane.doe@example.com
"""

Step 3: Craft The “Magic Prompt”

This is the most important part. We need to give the AI crystal-clear instructions. We will tell it its role, its task, and the exact format for the output. We are forcing it to return only valid JSON.

system_prompt = """
You are an expert data extraction AI. Your task is to analyze the user's text and extract key information into a structured JSON format.

The JSON object must contain the following fields:
- "customer_name": string
- "customer_email": string
- "order_number": string
- "product_name": string
- "order_date": string (in YYYY-MM-DD format)
- "sentiment": string (must be one of: "positive", "neutral", "negative")

If a piece of information is not present in the text, you must use a null value for that field.
Do not include any extra text, explanations, or apologies. Only return the valid JSON object.
"""

See how specific that is? We’re leaving nothing to chance. We define the fields, the data types, and even the allowed values for `sentiment`.

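Because the prompt is really just a field list plus a few rules, one option is to generate it from a small dictionary so the fields live in one place. This is a sketch, not part of the lesson’s script; `FIELDS` and `build_system_prompt` are hypothetical names.

```python
# Field name -> description of the expected value (mirrors the prompt in this step).
FIELDS = {
    "customer_name": "string",
    "customer_email": "string",
    "order_number": "string",
    "product_name": "string",
    "order_date": "string (in YYYY-MM-DD format)",
    "sentiment": 'string (must be one of: "positive", "neutral", "negative")',
}


def build_system_prompt(fields: dict[str, str]) -> str:
    """Assemble an extraction prompt from a field specification."""
    field_lines = "\n".join(f'- "{name}": {spec}' for name, spec in fields.items())
    return (
        "You are an expert data extraction AI. Your task is to analyze the user's "
        "text and extract key information into a structured JSON format.\n"
        "The JSON object must contain the following fields:\n"
        f"{field_lines}\n"
        "If a piece of information is not present in the text, you must use a null "
        "value for that field.\n"
        "Do not include any extra text, explanations, or apologies. "
        "Only return the valid JSON object."
    )


system_prompt = build_system_prompt(FIELDS)
print(system_prompt)
```

Adding or renaming a field then means editing one dictionary entry rather than hunting through a long string.
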
Step 4: Make the API Call

Now we put it all together. We send the system prompt and our unstructured text to the Groq API. The most important part here is `response_format={"type": "json_object"}`. This turns on JSON mode, which constrains the model to return syntactically valid JSON instead of chatty prose. It’s a game-changer. Note that it doesn’t enforce your field names or data types; that part is still the prompt’s job.

chat_completion = client.chat.completions.create(
    messages=[
        {
            "role": "system",
            "content": system_prompt,
        },
        {
            "role": "user",
            "content": unstructured_text,
        },
    ],
    model="llama3-70b-8192",
    temperature=0,
    response_format={"type": "json_object"},
)

# Print the result
print(chat_completion.choices[0].message.content)

`temperature=0` tells the AI not to get creative. We want output that is as predictable and repeatable as possible.

Complete Automation Example

Let’s put it all together in one file. Copy this, paste it into your extractor.py file, add your API key, and run it from your terminal with python extractor.py.

import os
import json

from groq import Groq

# --- 1. SETUP ---
# Replace with your actual Groq API key
client = Groq(
    api_key="YOUR_GROQ_API_KEY",
)

# --- 2. YOUR MESSY INPUT TEXT ---
unstructured_text = """
Hi support team,

I'm writing because my recent order, #G123-89B, hasn't shipped yet.
I ordered the 'Quantum Widget Pro' on May 15th, 2024.
I'm getting pretty frustrated because I needed it for a project this week.

Thanks,
Jane Doe
jane.doe@example.com
"""

# --- 3. THE MAGIC PROMPT (YOUR INSTRUCTIONS) ---
system_prompt = """
You are an expert data extraction AI. Your task is to analyze the user's text and extract key information into a structured JSON format.

The JSON object must contain the following fields:
- "customer_name": string
- "customer_email": string
- "order_number": string
- "product_name": string
- "order_date": string (in YYYY-MM-DD format)
- "sentiment": string (must be one of: "positive", "neutral", "negative")

If a piece of information is not present in the text, you must use a null value for that field.
Do not include any extra text, explanations, or apologies. Only return the valid JSON object.
"""

# --- 4. THE API CALL ---
try:
    chat_completion = client.chat.completions.create(
        messages=[
            {
                "role": "system",
                "content": system_prompt,
            },
            {
                "role": "user",
                "content": unstructured_text,
            },
        ],
        model="llama3-70b-8192",
        temperature=0,
        response_format={"type": "json_object"},
    )

    # --- 5. THE CLEAN OUTPUT ---
    # The result is a string, so we parse it into a Python dictionary
    extracted_data = json.loads(chat_completion.choices[0].message.content)

    # Pretty-print the JSON to the console
    print(json.dumps(extracted_data, indent=2))

except Exception as e:
    print(f"An error occurred: {e}")

When you run this, you’ll see this beautiful, clean JSON printed to your screen almost instantly:

{
  "customer_name": "Jane Doe",
  "customer_email": "jane.doe@example.com",
  "order_number": "G123-89B",
  "product_name": "Quantum Widget Pro",
  "order_date": "2024-05-15",
  "sentiment": "negative"
}

Boom. Clean, structured data in a fraction of a second. Take that, Chad.

Real Business Use Cases

You can point this exact same automation at any text-based problem. Just change the `unstructured_text` and the `system_prompt`.

- Real Estate Agency: Feed it emails from your Zillow contact form. Extract `name`, `phone`, `property_address`, `is_buyer_or_seller`, and `timeline`. Pipe the clean JSON directly into your CRM to create a new lead.

- E-commerce Store: Scrape all the reviews for your products. Extract `product_sku`, `star_rating`, `key_features_mentioned`, and a `review_summary`. Analyze the results to find your most-loved features and common complaints.

- Recruiting Firm: Feed it resumes as raw text. Extract `candidate_name`, `email`, `phone_number`, `years_of_experience`, and `key_skills` (as a list). Use this to pre-fill your Applicant Tracking System (ATS).

- Financial Analyst: Process quarterly earnings call transcripts. Extract `revenue_reported`, `forward_looking_guidance`, `ceo_sentiment`, and `mentions_of_competitors`. Turn a 1-hour call into a structured summary in seconds.

- SaaS Company: Analyze user feedback from a feature request board. Extract `feature_requested`, `user_role` (e.g., ‘admin’, ‘editor’), `urgency_level`, and `business_impact`. Use this to automatically prioritize your product roadmap.
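
To make that swap concrete, the call can be wrapped in a small function that takes the prompt and the text as arguments. This is a sketch under assumptions: `extract` and `extract_many` are hypothetical helpers, and `client` is expected to be the Groq client created in Step 1 (the functions rely only on the `chat.completions.create` call already used in this lesson).

```python
import json


def extract(client, system_prompt: str, text: str, model: str = "llama3-70b-8192") -> dict:
    """Send one document through the extraction prompt and return a dictionary."""
    completion = client.chat.completions.create(
        messages=[
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": text},
        ],
        model=model,
        temperature=0,
        response_format={"type": "json_object"},
    )
    return json.loads(completion.choices[0].message.content)


def extract_many(client, system_prompt: str, texts: list[str]) -> list[dict]:
    """Run the same prompt over a batch, recording failures instead of stopping."""
    results = []
    for text in texts:
        try:
            results.append(extract(client, system_prompt, text))
        except Exception as error:  # network errors, invalid JSON, and so on
            results.append({"error": str(error)})
    return results
```

For the real estate case, for example, you would pass a prompt that lists `name`, `phone`, `property_address`, `is_buyer_or_seller`, and `timeline`, and leave the rest of the code alone.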

Common Mistakes & Gotchas

- Not Using JSON Mode: If you forget `response_format={"type": "json_object"}`, the model might return something like “Sure, here is the JSON you requested: { … }”. This will break any downstream automation. Always force JSON output.

- Vague Prompting: If you just say “extract the details,” the AI might invent field names (`cust_name` instead of `customer_name`) or use inconsistent formats. Be brutally specific in your system prompt. Your prompt is your contract with the AI.

- Ignoring Missing Data: Your text won’t always contain every field. If you don’t instruct the AI on how to handle missing info (e.g., “use null”), it might just leave the field out, which can cause errors.

- Choosing the Wrong Model: For simple extractions, a smaller, faster model like Llama3-8b is fine. For complex legal documents or nuanced sentiment, a more powerful model like Llama3-70b is worth it for the improved accuracy. Groq lets you switch easily.
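
Several of these gotchas can be caught in code before the data goes anywhere. Below is a minimal validation sketch using only the standard library; `validate_record` and its rules are assumptions based on the fields in this lesson’s prompt, so adapt them to your own schema.

```python
from datetime import datetime

REQUIRED_FIELDS = (
    "customer_name", "customer_email", "order_number",
    "product_name", "order_date", "sentiment",
)
ALLOWED_SENTIMENTS = ("positive", "neutral", "negative")


def validate_record(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record looks usable."""
    problems = []

    # Every field must be present, even if its value is null (None).
    for field in REQUIRED_FIELDS:
        if field not in record:
            problems.append(f"missing field: {field}")

    # Sentiment must be one of the allowed values, or null.
    sentiment = record.get("sentiment")
    if sentiment is not None and sentiment not in ALLOWED_SENTIMENTS:
        problems.append(f"unexpected sentiment: {sentiment!r}")

    # Dates must parse as a real calendar date in year-month-day order, or be null.
    order_date = record.get("order_date")
    if order_date is not None:
        try:
            datetime.strptime(order_date, "%Y-%m-%d")
        except (TypeError, ValueError):
            problems.append(f"bad order_date: {order_date!r}")

    return problems
```

A record that comes back with problems can be logged or retried instead of being written straight into your CRM.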

How This Fits Into a Bigger Automation System

This script is a single, powerful gear in a much larger machine. It’s rarely the start or end of the process.

Think of it as the “Formatting Department” in your automation factory:

- The Input: The `unstructured_text` can come from anywhere. A webhook from your website, an email parsed by Make or Zapier, a new row in a Google Sheet, a transcription from a voice AI that listened to a customer call.

- The Output: The clean JSON it produces is now fuel for other systems. You can use it to:

  - Create or update a record in a CRM (HubSpot, Salesforce).

  - Add a row to a database (Airtable, Postgres).

  - Trigger another AI agent (e.g., if `sentiment` is `negative`, trigger an “Urgent Response Agent”).

  - Generate a summary for a Slack notification.
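
As one illustration of the “trigger another agent” idea, here is a sketch of routing on the `sentiment` field. The handlers `escalate` and `file_ticket` are hypothetical stand-ins; in a real system they would call your CRM, Slack, or another agent.

```python
def escalate(record: dict) -> str:
    # Placeholder: a real version might notify an "Urgent Response Agent".
    name = record.get("customer_name")
    order = record.get("order_number")
    return f"URGENT: follow up with {name} about order {order}"


def file_ticket(record: dict) -> str:
    # Placeholder: a real version might create a CRM record or database row.
    return f"Ticket filed for order {record.get('order_number')}"


def route(record: dict) -> str:
    """Pick the next step based on the extracted sentiment."""
    if record.get("sentiment") == "negative":
        return escalate(record)
    return file_ticket(record)


jane = {"customer_name": "Jane Doe", "order_number": "G123-89B", "sentiment": "negative"}
print(route(jane))
```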

This skill—turning chaos into structure—is the fundamental building block of almost every advanced AI automation.

What to Learn Next

Okay, you’ve built a robot that can read and understand text with terrifying speed and accuracy. You’ve replaced the most tedious part of knowledge work.

So… what now?

Now that we have clean, structured data the moment it arrives, we can act on it automatically. What if we could not only extract the data from Jane’s email but also instantly use it to look up her order in our database and draft a personalized reply?

In the next lesson in this series, we’re going to do exactly that. We’ll take the JSON output from this script and feed it to a second AI agent—a “Tool-Using Agent”—that can connect to other systems to find information and take action. We’re going from a passive data clerk to an active, problem-solving robot employee.

You’ve built the eyes and ears. Next, we build the hands.

Stay sharp.
