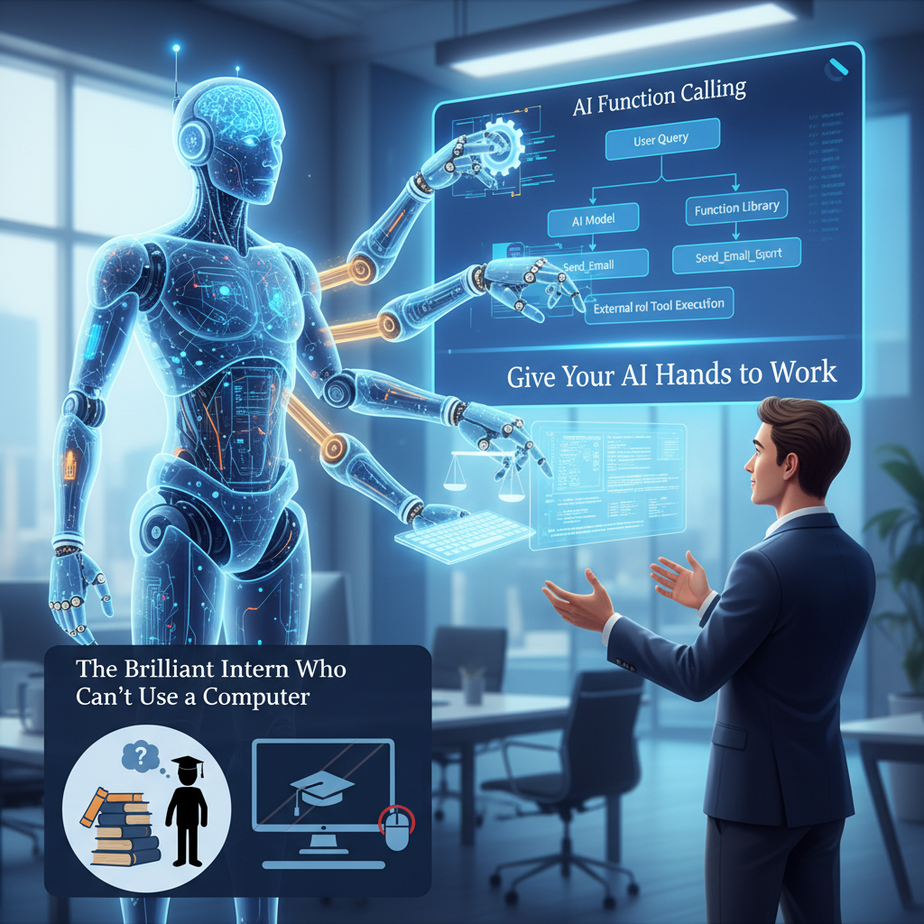

The Brilliant Intern Who Can’t Use a Computer

Imagine you hire a new intern. This intern, let’s call him Leo, is a genius. He’s read every book ever written. He can summarize complex legal documents in seconds, draft brilliant marketing copy, and speak seventeen languages.

There’s just one problem. Leo has no hands. He’s a brain in a jar.

You ask him, “Leo, what’s the current price of our main competitor’s stock?” He replies, “I can’t access real-time financial data, but based on my training data from last year, it was approximately $150 per share.” Useless.

You ask, “Can you check if customer order #4815 has shipped?” He says, “I do not have access to your company’s order management system. However, I can explain the general principles of logistics and supply chain management.” Also useless.

This is the state of most Large Language Models (LLMs) out of the box. They’re brilliant talkers, but they can’t *do* anything. They’re locked in a digital box, unable to interact with the real world. Today, we’re giving our AI hands. We’re bolting on a toolbelt.

Why This Matters

Function calling (or “tool use”) is arguably the most important concept in AI automation. It’s the bridge between conversation and action. It elevates your AI from a chatbot to a “do-bot.”

- Automate Real Work: Instead of just answering questions, an AI can now directly interact with your business systems. It can query a database, update a CRM, send an email, or check inventory levels.

- Create Interactive Tools: Build agents that respond to natural language commands. “Book a meeting with Sarah for next Tuesday at 3 PM.” The AI understands the intent, identifies the people and time, and then calls your calendar API to create the event.

- Build Autonomous Agents: This is the foundation for agents that can perform multi-step tasks. An agent can first look up a customer’s info in the CRM, then check their recent orders in your e-commerce platform, and finally draft a personalized follow-up email based on that data.

This is how we move from AI as a fancy search engine to AI as a tireless, infinitely scalable digital employee.

What This Tool / Workflow Actually Is

Function Calling is a mechanism that allows an LLM to request the execution of a specific function or tool in your code.

Think of it like this: your AI is the manager, and your Python code is the employee. The manager (AI) doesn’t know *how* to do the work, but it’s smart enough to know *when* a specific tool is needed and *what information* to give that tool.

The workflow is a two-step dance:

- Step 1 (AI Decides): You give the AI a prompt (e.g., “What’s the weather in San Francisco?”) and a list of available tools (e.g., a tool called `get_current_weather` that takes a `location` as input). The AI analyzes the prompt and, instead of answering directly, replies with a structured message that amounts to: “I should call the `get_current_weather` function with the argument `location='San Francisco'`.”

- Step 2 (You Execute): Your code receives this structured message. It then calls the *actual* `get_current_weather` function in your Python script. The function runs, gets the real weather data, and returns it (e.g., “65°F and foggy”). You then send this result *back* to the AI and say, “Okay, I ran the tool for you. Here’s the data.”

Finally, the AI uses this new data to generate a user-friendly response: “The current weather in San Francisco is 65°F and foggy.”
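In code, the two steps appear as extra entries in the conversation history. The sketch below builds that history by hand in the OpenAI-compatible message format that Groq's chat API accepts. The `call_123` id and the weather values are placeholders, not real API output. Notice that the model sends its arguments as a JSON-encoded *string*, not as a ready-made dictionary.

```python
import json

# Step 1: the model answers with a tool request instead of text.
# (Field layout follows the OpenAI-compatible chat format; the id is a placeholder.)
assistant_turn = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {
            "id": "call_123",
            "type": "function",
            "function": {
                "name": "get_current_weather",
                # Arguments arrive as a JSON string that your code must parse.
                "arguments": json.dumps({"location": "San Francisco"}),
            },
        }
    ],
}

# Step 2: your code runs the real function and reports the result back,
# linked to the original request by tool_call_id.
tool_turn = {
    "role": "tool",
    "tool_call_id": "call_123",
    "content": json.dumps({"temperature": "65", "unit": "fahrenheit", "conditions": "foggy"}),
}

messages = [
    {"role": "user", "content": "What's the weather in San Francisco?"},
    assistant_turn,
    tool_turn,
]
# A second model call with `messages` produces the final, user-facing sentence.
```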

What it is NOT: The AI does not write or execute the code itself. You, the developer, are responsible for creating the tools and making them available. The AI just tells you which one to use and which arguments to pass.

Prerequisites

We’re connecting concepts now, so the list is growing, but it’s all manageable.

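- Everything from the last lessons: a Groq account and API key, plus Python with the `groq` package installed (`pip install groq`). The `Groq()` client reads your key from the `GROQ_API_KEY` environment variable. We’re sticking with Groq because speed matters in this back-and-forth with the AI: every tool call adds at least one extra round trip.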

- Basic Python Knowledge: You need to know what a function is (`def my_function():`) and how to call one.

- Basic JSON Knowledge: You need to understand the concept of key-value pairs (e.g., `{"location": "Boston"}`). The AI uses JSON to pass the arguments for the tool it wants to call.
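Those two skills meet in a single line of the tutorial code. Here is a minimal, self-contained sketch of that step, using a stand-in `get_current_weather` that returns fake data:

```python
import json

def get_current_weather(location):
    # Stand-in tool that returns fake data, like the ones in Step 1.
    return json.dumps({"location": location, "temperature": "unknown"})

# What the model hands back: a tool name plus its arguments as a JSON *string*.
tool_name = "get_current_weather"
raw_arguments = '{"location": "Boston"}'

# json.loads turns the string into a dict of key-value pairs...
args = json.loads(raw_arguments)  # -> {'location': 'Boston'}

# ...and ** unpacks that dict into keyword arguments,
# so this is the same as get_current_weather(location="Boston").
available_functions = {"get_current_weather": get_current_weather}
result = available_functions[tool_name](**args)
print(result)
```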

Don’t be intimidated. The code is simple, and the concept is what matters. You can do this.

Step-by-Step Tutorial

Let’s build a simple agent that has two tools: one to get the weather and one to get a stock price.

Step 1: Define Your Python “Tools”

First, we create the actual functions our AI can use. These are just regular Python functions. For this example, they’ll just return fake data, but in a real-world scenario, they would make real API calls.

Create a file called agent_with_tools.py and add this to the top:

```python
import json

def get_current_weather(location):
    """Get the current weather in a given location"""
    print(f"--- Calling Tool: get_current_weather(location={location}) ---")
    # In a real app, you'd make an API call here.
    if "tokyo" in location.lower():
        return json.dumps({"location": "Tokyo", "temperature": "10", "unit": "celsius"})
    elif "san francisco" in location.lower():
        return json.dumps({"location": "San Francisco", "temperature": "72", "unit": "fahrenheit"})
    else:
        return json.dumps({"location": location, "temperature": "unknown"})

def get_stock_price(symbol):
    """Get the current stock price for a given symbol"""
    print(f"--- Calling Tool: get_stock_price(symbol={symbol}) ---")
    # In a real app, you'd make an API call here.
    if symbol.upper() == "GOOG":
        return json.dumps({"symbol": "GOOG", "price": "175.45", "currency": "USD"})
    elif symbol.upper() == "AAPL":
        return json.dumps({"symbol": "AAPL", "price": "190.90", "currency": "USD"})
    else:
        return json.dumps({"symbol": symbol, "price": "unknown"})
```

Step 2: Describe the Tools for the AI

This is the most critical part. We need to create a “manual” for our tools that the AI can understand. For each tool, the manual gives its name, a description of what it does, and the parameters (arguments) it requires. The parameters themselves are described in a standard format called JSON Schema.
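The envelope around each tool is always the same. Only the name, the description and the parameter schema change. If the nesting is hard to read, a small helper makes the structure explicit. `make_tool` here is our own hypothetical function, not part of the Groq SDK, and you don't need it for this tutorial. The literal list that follows produces the same dictionaries.

```python
def make_tool(name, description, properties, required):
    """Hypothetical helper: wrap a JSON Schema parameter description
    in the {"type": "function", "function": {...}} envelope."""
    return {
        "type": "function",
        "function": {
            "name": name,                # must match a key in your dispatch table
            "description": description,  # what the model reads to decide when to use the tool
            "parameters": {              # this inner part is plain JSON Schema
                "type": "object",
                "properties": properties,
                "required": required,
            },
        },
    }

weather_tool = make_tool(
    "get_current_weather",
    "Get the current weather in a given location",
    {"location": {"type": "string", "description": "The city and state, e.g. San Francisco, CA"}},
    ["location"],
)
```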

Add this list to your agent_with_tools.py file:

```python
tools = [
    {
        "type": "function",
        "function": {
            "name": "get_current_weather",
            "description": "Get the current weather in a given location",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "The city and state, e.g. San Francisco, CA",
                    },
                },
                "required": ["location"],
            },
        },
    },
    {
        "type": "function",
        "function": {
            "name": "get_stock_price",
            "description": "Get the current stock price for a given symbol",
            "parameters": {
                "type": "object",
                "properties": {
                    "symbol": {
                        "type": "string",
                        "description": "The stock ticker symbol, e.g. GOOG or AAPL",
                    },
                },
                "required": ["symbol"],
            },
        },
    },
]
```

Step 3: Build the Main Agent Loop

Now we create the logic that handles the two-step dance. It sends the prompt and tools to Groq, gets the AI’s decision, runs the right tool, and sends the result back for the final answer.

Add the rest of the code to your file. Read the comments to understand the flow.

```python
from groq import Groq

# Initialize Groq client (reads your key from the GROQ_API_KEY environment variable)
client = Groq()

# Groq retires model IDs over time; check its model list if this one is rejected.
MODEL = "llama-3.3-70b-versatile"

def run_conversation(user_prompt):
    print(f"User: {user_prompt}")
    messages = [{"role": "user", "content": user_prompt}]

    # First API call: Send the prompt and tool descriptions to the AI
    response = client.chat.completions.create(
        model=MODEL,
        messages=messages,
        tools=tools,
        tool_choice="auto",
    )
    response_message = response.choices[0].message

    # Check if the AI wants to call a tool
    if response_message.tool_calls:
        # Append the AI's decision to our message history
        messages.append(response_message)

        # Map the tool names the AI knows about to the real Python functions
        available_functions = {
            "get_current_weather": get_current_weather,
            "get_stock_price": get_stock_price,
        }

        # Execute each tool call the AI requested
        for tool_call in response_message.tool_calls:
            function_name = tool_call.function.name
            function_to_call = available_functions[function_name]
            # The arguments arrive as a JSON string, e.g. '{"location": "Tokyo"}'
            function_args = json.loads(tool_call.function.arguments)
            # Call the actual Python function
            function_response = function_to_call(**function_args)
            # Append the tool's output to the message history
            messages.append(
                {
                    "tool_call_id": tool_call.id,
                    "role": "tool",
                    "name": function_name,
                    "content": function_response,
                }
            )

        # Second API call: Send the tool's result back to the AI for a final answer
        final_response = client.chat.completions.create(
            model=MODEL,
            messages=messages,
        )
        print(f"AI: {final_response.choices[0].message.content}")
    else:
        # If no tool is needed, just print the AI's direct response
        print(f"AI: {response_message.content}")

# --- Run the agent with some examples ---
if __name__ == "__main__":
    run_conversation("What is the weather like in Tokyo?")
    print("\n---------------------\n")
    run_conversation("What is the stock price for Google?")
```

Step 4: Run It!

In your terminal, run the script:

```bash
python agent_with_tools.py
```

You will see the output showing the whole process: the user prompt, the tool being called, and the final, natural-language answer from the AI. You’ve successfully given your AI hands!

Complete Automation Example

Let’s model a more complex business scenario: a customer service agent that can check an order status and process a refund.

Imagine these are your tools:

```python
import json

def get_order_status(order_id):
    """Looks up an order and returns its status."""
    # Fake database lookup
    if order_id == "A123":
        return json.dumps({"status": "shipped", "eta": "2 days"})
    return json.dumps({"status": "not_found"})

def process_refund(order_id, amount, reason):
    """Processes a refund for a given order ID."""
    print(f"--- REFUNDING ${amount} for order {order_id} due to: {reason} ---")
    return json.dumps({"refund_status": "success"})
```

Now, a user types: “My order A123 is taking forever. I want to cancel it and get my money back. The total was $59.99.”

Our agent would perform a multi-step thought process:

- AI Brain: “The user mentioned an order ID. I should check its status first. I’ll call `get_order_status` with `order_id='A123'`.”

- Your Code: Runs the function, which returns `{"status": "shipped", "eta": "2 days"}`.

- AI Brain: “Okay, the order has shipped. The user also wants to cancel and get a refund for $59.99 because of a delay. I should now call `process_refund` with `order_id='A123'`, `amount=59.99`, and `reason='Customer dissatisfied with shipping time'`.”

- Your Code: Runs the refund function, which returns `{"refund_status": "success"}`.

- Final AI Response: “I’ve checked on your order A123, and it has already shipped with an ETA of 2 days. However, I understand your frustration with the delay, so I have gone ahead and processed a full refund of $59.99 for you. You can expect to see that in 3-5 business days.”
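One caveat: the `run_conversation` function from Step 3 handles only a single round of tool calls. It then asks for a final answer without offering the tools again. The order-then-refund sequence above needs the model to see the first result before it decides on the second call. The minimal sketch below handles that by looping until the model stops requesting tools. It assumes the same OpenAI-compatible Groq client and a `tools` list that describes `get_order_status` and `process_refund` in the Step 2 format (not shown). `run_agent_loop` and its `max_rounds` limit are our own illustrative names.

```python
import json
from typing import Any, Callable

def run_agent_loop(client: Any, model: str, messages: list, tools: list,
                   available_functions: dict[str, Callable[..., str]],
                   max_rounds: int = 5) -> str:
    """Call the model repeatedly until it answers without requesting a tool.

    `client` is assumed to be an OpenAI-compatible client such as groq.Groq().
    `max_rounds` is our own safety limit against endless tool-calling.
    """
    for _ in range(max_rounds):
        response = client.chat.completions.create(
            model=model, messages=messages, tools=tools, tool_choice="auto"
        )
        message = response.choices[0].message
        if not message.tool_calls:
            return message.content  # No tool requested: this is the final answer.

        messages.append(message)  # Record the tool request, as in Step 3.
        for tool_call in message.tool_calls:
            name = tool_call.function.name
            func = available_functions.get(name)
            if func is None:
                # The model asked for a tool we never registered; tell it so.
                result = json.dumps({"error": f"Unknown tool: {name}"})
            else:
                result = func(**json.loads(tool_call.function.arguments))
            messages.append({
                "tool_call_id": tool_call.id,
                "role": "tool",
                "name": name,
                "content": result,
            })
        # Loop again: the model now sees the results and may call another tool.
    return "Stopped after reaching the tool-calling limit."
```

On every pass the model re-reads the whole message history. That lets it check order A123, read the “shipped” result, and only then decide to call `process_refund`. The round limit is a deliberate choice: without one, a confused model could keep requesting tools indefinitely.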

This is not just a conversation. This is a problem being solved, a customer being helped, and a business process being automated, all driven by natural language.

Real Business Use Cases

- Sales Automation: An agent that monitors incoming emails. If an email contains a purchase inquiry, the agent uses a `search_crm` tool to see if the person is an existing customer, then uses a `draft_email` tool to create a personalized response, and finally uses a `schedule_meeting` tool to book a demo.

- IT Helpdesk: An employee uses a Slack bot to say, “I can’t access the marketing drive.” The agent uses a `check_user_permissions` tool, identifies a mismatch, and then uses a `grant_access` tool to fix the problem, all without a human IT admin.

- Data Analysis: An analyst asks, “What were our top 5 selling products last month?” The AI agent uses a `query_database` tool with a SQL query it generates itself, gets the raw data back, and then summarizes it for the analyst.

- Travel Concierge: A voice agent (using our skills from the last lesson!) that can `search_flights`, `check_hotel_prices`, and `book_rental_car`. The user just has to say, “Find me a flight to Miami next Friday and a hotel near the beach.”

- DevOps Management: A senior engineer can tell a bot, “Restart the web server on cluster B.” The agent authenticates, identifies the correct server, and calls a secure `execute_cli_command` tool to perform the restart safely.
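Letting the model write SQL, as in the data-analysis case, is only reasonable if the tool itself refuses to change data. Here is a minimal sketch of a read-only `query_database` tool using Python's built-in `sqlite3` module, with an in-memory table standing in for a real warehouse. The table, its data and the function signature are our own illustration. A production system would more likely also connect with a database account that has read permissions only.

```python
import json
import sqlite3

def query_database(sql: str, conn: sqlite3.Connection) -> str:
    """Run a model-written SQL query against `conn`, allowing reads only."""
    # First line of defence: reject anything that is not a SELECT.
    if not sql.lstrip().lower().startswith("select"):
        return json.dumps({"error": "Only SELECT queries are allowed."})
    try:
        # execute() accepts one statement at a time, so "SELECT ...; DROP ..."
        # raises instead of running the DROP (older Pythons raise sqlite3.Warning).
        rows = conn.execute(sql).fetchall()
    except (sqlite3.Error, sqlite3.Warning) as exc:
        # Hand the error back so the model can correct its SQL and try again.
        return json.dumps({"error": str(exc)})
    return json.dumps({"rows": rows})

# Demo data standing in for a real sales database (made-up table and numbers).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (product TEXT, units INTEGER)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", [("Widget", 120), ("Gadget", 80)])
conn.commit()
# Second line of defence: SQLite refuses any write on this connection from now on.
conn.execute("PRAGMA query_only = ON")
```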

Common Mistakes & Gotchas

- Vague Tool Descriptions: The AI relies *entirely* on your descriptions. If your description for `get_stock_price` is just “Gets data,” the AI will have no idea when to use it. Be specific: “Gets the current stock price for a given stock ticker symbol.”

- Giving the AI Too Much Power: Never, ever give an AI a tool that can do something destructive without a human-in-the-loop confirmation step. A tool like `delete_all_users` is a recipe for disaster. Start with read-only tools first.

- Ignoring Errors: What if your internal API is down? Your tool should `return` an error message. The AI is smart enough to see that error and reply to the user, “I’m sorry, I’m unable to access the order system right now. Please try again in a few minutes.”

- Complex, Monolithic Functions: Don’t create one giant tool that does ten things. Create ten small, specific tools. This makes it much easier for the AI to reason about which one to use.
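Two of these rules can live in one thin wrapper around each tool: human confirmation for risky actions, and errors returned rather than raised. In this sketch, `guarded_tool` is a hypothetical helper of our own. A console `input()` prompt stands in for whatever approval channel you would use in production, and the failing `get_order_status` simulates an outage of the order system.

```python
import json
from typing import Callable

def guarded_tool(func: Callable[..., str], needs_approval: bool = False,
                 ask: Callable[[str], str] = input) -> Callable[..., str]:
    """Hypothetical wrapper: optional human approval plus errors returned as data."""
    def wrapper(**kwargs) -> str:
        if needs_approval:
            answer = ask(f"AI wants to run {func.__name__}({kwargs}). Approve? [y/N] ")
            if answer.strip().lower() != "y":
                # Tell the model the action was blocked so it can explain why.
                return json.dumps({"error": "Action was not approved by a human."})
        try:
            return func(**kwargs)
        except Exception as exc:  # e.g. the order API is down or times out
            # Return the failure as data so the model can apologise to the user
            # instead of the whole script crashing.
            return json.dumps({"error": f"{func.__name__} failed: {exc}"})
    return wrapper

def get_order_status(order_id):
    # Simulates an outage of the internal order system.
    raise ConnectionError("order service unreachable")

def process_refund(order_id, amount, reason):
    # Fake refund, as in the customer service example.
    return json.dumps({"refund_status": "success"})

# Register the wrapped versions, not the raw functions.
available_functions = {
    "get_order_status": guarded_tool(get_order_status),                   # read-only
    "process_refund": guarded_tool(process_refund, needs_approval=True),  # moves money: ask first
}
```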

How This Fits Into a Bigger Automation System

Function calling is the engine of modern AI automation. It’s the piece that connects the brain to the rest of the body.

- Voice Agents: When you combine this lesson with the last, you get a voice assistant you can command to perform real actions, not just answer trivia. This is how you build a real-life Jarvis.

- Multi-Agent Workflows: In a system with multiple agents (e.g., a Research Agent and a Writing Agent), function calling is how they pass tasks to each other. The manager agent might call a `delegate_research_task` tool, which activates the research agent.

- RAG Systems: A Retrieval-Augmented Generation system finds relevant documents. But what if one of those documents contains a command? Function calling allows the agent to not just read the document, but to *act* on the information it contains.

Essentially, any time you want your AI to interact with another piece of software — a CRM, an email platform, a database, an internal API — function calling is the mechanism you will use.

What to Learn Next

Let’s recap our journey. We built an AI brain that thinks at lightning speed. We gave it a voice and ears to have real-time conversations. Today, we gave it hands to use tools and interact with the world.

Our agent is now incredibly powerful, but it’s still reactive. It waits for our command. It’s an intern, waiting for instructions.

In the next lesson, we take the final step. We’re cutting the puppet strings. We will learn how to build autonomous agents that can operate on their own. We will give our agent a goal, a set of tools, and the ability to think step-by-step, deciding for itself which tool to use next to achieve its objective. We’re moving from building assistants to building workers.

Get ready. This is where it gets really interesting. See you in the next lesson.
