The Intern Who Typed One Letter at a Time

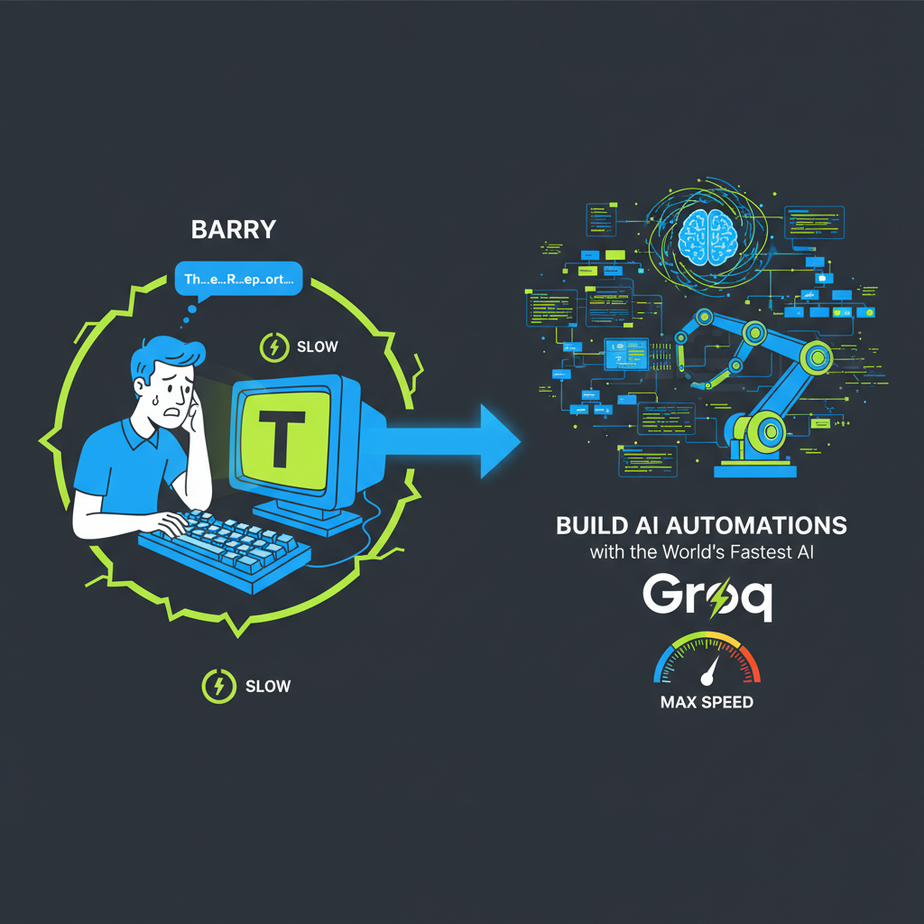

I once had an intern. Let’s call him Barry. I asked Barry to summarize a 10-page report. He sat down, cracked his knuckles, and started typing. The summary was… fine. But watching him work was painful. He would type one letter, pause, think, type another, backspace three times, and then stare into space for a full minute.

Most of the AI tools you’ve used feel like Barry. You ask a question, and the chatbot dutifully starts “thinking,” printing its answer word… by… agonizing… word. You can almost feel the silicon sweating. For simple chats, it’s a minor annoyance. For a business automation that needs to process 1,000 items an hour? It’s a catastrophic failure.

Speed isn’t a luxury in automation; it’s the entire point. What good is an AI-powered robot if it moves slower than the human it replaced? Today, we’re firing Barry and hiring a new intern who thinks at the speed of light. Let’s talk about Groq.

Why This Matters

In the world of AI, there are two speeds: “pretty cool” and “business critical.”

“Pretty cool” is asking an AI to write a poem and watching it appear over 15 seconds. “Business critical” is when a customer is on the phone and your AI sales agent needs to analyze their question, look up a product in a database, and formulate a response before the customer hangs up.

Latency—the delay between a request and a response—is the silent killer of AI automation. It’s the difference between a real-time sentiment analysis tool that helps a support agent *during* a call, and one that sends them a summary report an hour later. It’s the difference between an interactive coding assistant and a glorified search engine.

Mastering a high-speed inference engine like Groq means you can build systems that simply weren't practical with slower inference. You're not just making things faster; you're unlocking entirely new categories of automation that rely on near-instant intelligence.

What This Tool / Workflow Actually Is

Let’s be crystal clear. Groq is not a new Large Language Model (LLM) like GPT-4 or Llama 3. It’s not a new brain.

Groq is a new *engine*.

They built a completely new kind of chip called an LPU (Language Processing Unit) designed to do one thing: run existing, open-source LLMs at absolutely ludicrous speeds. Think of it like this: Llama 3 is a brilliant race car driver. A normal server with GPUs is a standard race track. Groq is a track made of pure lightning that has no speed limit.

What it does: It takes requests for models like Llama 3 or Mixtral and generates responses at hundreds of tokens per second, faster than most other inference providers.

What it does NOT do: It doesn’t create better models. The quality of the answer you get is determined by the underlying model (e.g., Llama 3). Groq just delivers that answer faster than you can blink.

Prerequisites

This is where people get nervous. Don’t. If you can order a pizza online, you can do this.

- A Groq Cloud Account: It’s free to get started. Go to their website, sign up, and you’ll get access to an API key. This is your secret password to the fast engine.

- Basic Python Setup: We’re talking absolute basics. If you don’t have Python, a quick Google search for “Install Python on [Your OS]” will get you there. We only need to install one small library.

- The Ability to Copy and Paste: I will give you every line of code. Your only job is to copy it, paste it, and run it. No deep programming knowledge required today.

That’s it. No credit card, no server administration, no machine learning degree.

Step-by-Step Tutorial

Let’s get our hands dirty. In five minutes, you’ll have this running.

Step 1: Get Your Groq API Key

First, head over to GroqCloud. Sign up, navigate to the “API Keys” section, and click “Create API Key.” Give it a name like “MyFirstAutomation” and copy the key it gives you. Guard this key like it’s your house key. Don’t share it or post it publicly.
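A safer habit than pasting the key into every script is to keep it in an environment variable. Below is a minimal sketch. It assumes the variable is named `GROQ_API_KEY`, which as far as I know is also the name the official Groq client checks when you don't pass `api_key` explicitly. The helper name `load_groq_api_key` is hypothetical.

```python
import os


def load_groq_api_key(env_var: str = "GROQ_API_KEY") -> str:
    """Read the Groq API key from an environment variable.

    Keeping the key out of your source files means you can share or commit
    a script without leaking the key.
    """
    # Strip stray whitespace that often sneaks in when copying the key.
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"{env_var} is not set. Export it in your shell first, e.g. "
            f"export {env_var}=your_key_here"
        )
    return key
```

Set the variable with `export GROQ_API_KEY=your_key_here` on macOS/Linux or `setx GROQ_API_KEY your_key_here` on Windows, then open a new terminal. Once the library from Step 2 is installed, you can pass the key as `Groq(api_key=load_groq_api_key())`.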

Step 2: Set Up Your Python Project

Open up your terminal or command prompt. We need to install the official Groq Python library.

```shell
pip install groq
```

That’s it. You just installed the toolkit. Now create a new file named fast_bot.py and open it in a simple text editor.
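A common snag is having several Python installations, so `pip` installs the library into one Python while your script runs under another. The short check below uses only the standard library. If you save it as a file and run it with the same `python` command you'll use in Step 4, it reports whether that interpreter can see the `groq` package. The helper name is hypothetical. If the package shows as missing, `python -m pip install groq` installs it for that exact interpreter.

```python
import sys
from importlib import metadata
from typing import Optional


def groq_sdk_version() -> Optional[str]:
    """Return the installed version of the `groq` package, or None if it's missing."""
    try:
        return metadata.version("groq")
    except metadata.PackageNotFoundError:
        return None


# Report which interpreter is running and whether it can see the package.
version = groq_sdk_version()
print(f"Python {sys.version.split()[0]} at {sys.executable}")
print(f"groq package: {version or 'NOT installed (try: python -m pip install groq)'}")
```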

Step 3: Write Your First Super-Fast Script

Paste the following code into your fast_bot.py file. Replace `"YOUR_API_KEY"` with the actual key you copied in Step 1.

```python
import os

from groq import Groq

# Uses the GROQ_API_KEY environment variable if it's set; otherwise falls back
# to the placeholder, which you can replace with your key for this simple example.
client = Groq(
    api_key=os.environ.get("GROQ_API_KEY", "YOUR_API_KEY"),
)

chat_completion = client.chat.completions.create(
    messages=[
        {
            "role": "user",
            "content": "Explain the importance of low-latency AI in business automation.",
        }
    ],
    # Model IDs are retired over time; if this one is rejected, pick a current
    # model from the GroqCloud model list.
    model="llama3-8b-8192",
)

print(chat_completion.choices[0].message.content)
```

Step 4: Run the Script

Go back to your terminal, make sure you’re in the same directory as your file, and run it:

```shell
python fast_bot.py
```

Blink. Did you miss it? The entire answer should appear almost immediately. Because this script doesn't stream, you won't see word-by-word typing: you get one block of text once generation finishes, and what stands out is how short the wait is. Welcome to the new standard.
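To put a number on "almost immediately," you can time the request yourself. This sketch uses a monotonic clock around any callable. It then divides the completion token count by the elapsed time to get a rough end-to-end throughput. That figure includes network round trips, so it understates raw generation speed. Both helper names are hypothetical.

```python
import time
from typing import Any, Callable, Tuple


def timed_call(fn: Callable[[], Any]) -> Tuple[Any, float]:
    """Run fn() and return (result, elapsed_seconds) using a monotonic clock."""
    start = time.perf_counter()
    result = fn()
    elapsed = time.perf_counter() - start
    return result, elapsed


def tokens_per_second(completion_tokens: int, elapsed_seconds: float) -> float:
    """Rough end-to-end throughput.

    The elapsed time includes network latency and any queueing, so this is
    lower than the pure generation speed a provider advertises.
    """
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return completion_tokens / elapsed_seconds
```

In fast_bot.py, wrap the request as `chat_completion, secs = timed_call(lambda: client.chat.completions.create(...))`. Then pass `chat_completion.usage.completion_tokens` and `secs` to `tokens_per_second`. The `usage` field follows the OpenAI-compatible response format.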

Complete Automation Example

Okay, a simple script is fun, but let’s build a mini-automation. Imagine you run an online store and customer emails flood your inbox. You need to instantly sort them into “Urgent Support,” “Sales Inquiry,” or “General Feedback.” A human intern (Barry) would take all day. Our Groq-bot will handle each email in a fraction of a second.

Here’s the code. We’ll simulate a new email and have Groq classify it.

```python
import os

from groq import Groq

# --- Your Settings ---
# Uses the GROQ_API_KEY environment variable if set; otherwise paste your key here.
GROQ_API_KEY = os.environ.get("GROQ_API_KEY", "YOUR_API_KEY")

# --- The Simulated Incoming Email ---
incoming_email = """
Subject: My order #12345 hasn't arrived!

Hi there,

I ordered a product last week and the tracking says it was delivered, but I never received it. Can you please help me figure out where it is?

Thanks,
Jane Doe
"""

# --- The Automation Logic ---
client = Groq(api_key=GROQ_API_KEY)


def classify_email(email_content):
    print("New email received. Classifying...")

    system_prompt = (
        "You are an email classification bot. Categorize the following email into "
        "one of three categories: Urgent Support, Sales Inquiry, or General Feedback. "
        "Respond with ONLY the category name and nothing else."
    )

    try:
        chat_completion = client.chat.completions.create(
            messages=[
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": email_content},
            ],
            model="llama3-8b-8192",
            temperature=0.0,  # Minimize randomness so the same email gets the same label
            max_tokens=10,    # A category name needs only a few tokens
        )
        classification = chat_completion.choices[0].message.content.strip()
        print(f"-> Classification Result: {classification}")
        return classification
    except Exception as e:
        # Network failures, invalid keys, rate limits, etc. all end up here.
        print(f"An error occurred: {e}")
        return "Error"


# --- Run the Automation ---
classify_email(incoming_email)
```

When you run this, it should print `-> Classification Result: Urgent Support` almost immediately. (Model output can vary, but this email is a clear-cut support request.) Imagine this script connected to your email server. Every single incoming email could be tagged and routed to the correct department before a human even sees it. That’s a real, scalable business process.
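Two things make a classifier like this sturdier in practice. First, even with a strict prompt, a model sometimes replies with `urgent support.` or `Category: Sales Inquiry` instead of the bare label. Second, API calls occasionally fail for transient reasons. The sketch below maps replies onto the allowed labels and retries a failing call with exponential backoff. Both helper names are hypothetical.

```python
import time
from typing import Callable, Optional, Sequence

CATEGORIES = ("Urgent Support", "Sales Inquiry", "General Feedback")


def normalize_label(raw: str, categories: Sequence[str] = CATEGORIES) -> Optional[str]:
    """Map a model reply onto one of the allowed labels (case-insensitive).

    Returns the first category whose name appears in the reply, or None if
    none does, so the caller can send the email to a human instead.
    """
    text = raw.strip().lower()
    for label in categories:
        if label.lower() in text:
            return label
    return None


def with_retries(fn: Callable[[], str], attempts: int = 3, base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep) -> str:
    """Call fn(), retrying on any exception with exponential backoff.

    Waits base_delay, then 2 * base_delay, and so on between attempts, and
    re-raises the last error if every attempt fails.
    """
    for attempt in range(attempts):
        try:
            return fn()
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * (2 ** attempt))
    # Only reached when attempts <= 0, because the loop never ran.
    raise ValueError("attempts must be at least 1")
```

Wrap the raw `client.chat.completions.create(...)` call in `with_retries`, not `classify_email` itself: `classify_email` already catches errors and returns `"Error"`, so a retry would never trigger. In real code you would likely catch narrower exceptions such as `groq.APIConnectionError` or `groq.RateLimitError`. The official client also accepts a `max_retries` argument, which may be enough on its own.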

Real Business Use Cases

- E-commerce Chatbots: A customer asks, “Do you have this shirt in blue?” A slow bot takes 5 seconds to reply. A Groq-powered bot hooked up to your inventory system can check stock and respond almost instantly, before the customer gets bored and leaves your site.

- Financial Data Analysis: A trading firm needs to analyze news articles for sentiment in real time. Fast inference makes it practical to score a high volume of articles as they arrive (within your plan's rate limits), letting an algorithm react to breaking news faster than a human reader could.

- Real-Time Voice Agents: This is the holy grail. A major reason AI phone agents sound robotic is the delay between turns. Groq’s low latency allows for a fluid, natural conversation, making it possible to build AI receptionists or support agents that don’t drive customers crazy.

- Content Moderation: A social media platform needs to scan user-generated comments for hate speech instantly. Groq can classify text as it’s submitted, blocking harmful content before it ever goes public.

- Interactive Tutoring Systems: An AI tutor for students needs to provide instant feedback on their answers. With Groq, a student can answer a math problem and get a detailed explanation of their mistake immediately, keeping them engaged and learning.
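Several of these use cases, such as moderation and news sentiment, boil down to classifying many short, independent texts. Each request spends most of its time waiting on the network, so a thread pool lets you keep several requests in flight at once. This is a standard-library sketch. `classify` can be any function that takes a string and returns a label, such as `classify_email` from the complete example. The helper name `classify_batch` is hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def classify_batch(items: List[str], classify: Callable[[str], str],
                   max_workers: int = 8) -> List[str]:
    """Classify many texts concurrently and return the labels in input order.

    Keep max_workers modest: your GroqCloud plan's rate limits cap how many
    requests per minute will succeed, no matter how many threads you start.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in input order, even if calls finish out of order.
        return list(pool.map(classify, items))
```

For example, `labels = classify_batch(emails, classify_email)` returns one label per email, in the same order as `emails`.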

Common Mistakes & Gotchas

- Blaming Groq for Bad Answers: Remember, Groq is the engine, not the driver. If you get a low-quality or incorrect answer, that’s the fault of the model (e.g., Llama 3). Your prompt engineering is still the most important factor for quality.

- Choosing the Wrong Model: Groq offers several models. For simple tasks like our classification example, a smaller, faster model like `llama3-8b-8192` is perfect. For complex reasoning, you might need a larger one. Don’t use a sledgehammer to crack a nut.

- Not Handling API Errors: What if the Groq service is temporarily down? An unhandled exception will crash your script. Real-world code needs `try`/`except` blocks (like in our complete example) to handle these failures gracefully.

- Ignoring Streaming for UIs: Even though the *total* response time is tiny, if you’re building a user-facing chatbot, you should still stream the response. It feels more natural to see the text appear progressively, even if it’s happening at lightning speed.
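Streaming with the Groq client looks much like the non-streaming call. You pass `stream=True`, iterate over the returned chunks, and read each piece of text from `chunk.choices[0].delta.content`. The sketch below takes the client as a parameter, which makes it easy to test with a fake. `stream_reply` is a hypothetical helper name.

```python
from typing import Any, Callable, Dict, List


def _print_now(text: str) -> None:
    # Flush immediately so each piece appears on screen as soon as it arrives.
    print(text, end="", flush=True)


def stream_reply(client: Any, messages: List[Dict[str, str]], model: str,
                 write: Callable[[str], Any] = _print_now) -> str:
    """Stream a chat completion, writing text as it arrives, and return the full reply."""
    stream = client.chat.completions.create(messages=messages, model=model, stream=True)
    parts: List[str] = []
    for chunk in stream:
        if not chunk.choices:
            continue  # Defensive: skip chunks with no choices at all.
        # Some chunks (e.g. the final one) carry no text, so content can be None.
        piece = chunk.choices[0].delta.content or ""
        if piece:
            write(piece)
            parts.append(piece)
    return "".join(parts)
```

With the client from Step 3, `stream_reply(client, [{"role": "user", "content": "Hi!"}], "llama3-8b-8192")` prints the answer progressively. It also returns the complete text, so you can log or store it.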

How This Fits Into a Bigger Automation System

What we built today is a single gear. A powerful gear, but still just one piece. The real magic happens when you connect it to the rest of the factory.

- CRM Integration: Connect our email classifier to your CRM (like HubSpot or Salesforce). When a “Sales Inquiry” is detected, the system can automatically create a new lead, assign it to a salesperson, and add the email content as a note.

- Voice Agents: This is the big one. Combine Groq with a Text-to-Speech (TTS) and Speech-to-Text (STT) service. The STT transcribes the user’s spoken words, Groq generates the reply instantly, and the TTS converts that text back into audio. This three-part pipeline, when fast enough, creates a believable conversational AI.

- Multi-Agent Workflows: Imagine an AI team. A “Dispatcher” agent gets a complex task. It uses Groq to instantly break the task down and assign sub-tasks to specialized agents (a “Research” agent, a “Writing” agent, etc.). The sheer speed means this coordination happens in milliseconds, not minutes.

- RAG Systems: When you need to answer questions based on your company’s private documents (Retrieval-Augmented Generation), Groq can take the context found by the retrieval system and synthesize an answer instantly. No more waiting for your internal knowledge-base bot to reply.
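The first integration above, sending each classified email somewhere useful, comes down to a dispatch table that maps labels to handler functions. In this sketch every handler is a hypothetical placeholder that just returns a string. In a real system, each one would call your CRM or helpdesk API (HubSpot, Salesforce, and so on). Unknown labels, including the `"Error"` that `classify_email` returns on failure, go to a fallback such as a human review queue.

```python
from typing import Callable, Dict


def route_email(label: str, email: str,
                handlers: Dict[str, Callable[[str], str]],
                fallback: Callable[[str], str]) -> str:
    """Send an email to the handler registered for its label, or to the fallback."""
    handler = handlers.get(label, fallback)
    return handler(email)


# Hypothetical placeholder handlers: swap in real CRM/helpdesk API calls.
def create_sales_lead(email: str) -> str:
    return "created CRM lead"


def open_support_ticket(email: str) -> str:
    return "opened urgent ticket"


def log_feedback(email: str) -> str:
    return "logged feedback"


def send_to_human(email: str) -> str:
    return "queued for manual review"


HANDLERS: Dict[str, Callable[[str], str]] = {
    "Sales Inquiry": create_sales_lead,
    "Urgent Support": open_support_ticket,
    "General Feedback": log_feedback,
}
```

Chained with the classifier, this becomes `route_email(classify_email(incoming_email), incoming_email, HANDLERS, send_to_human)`. Adding a new department means adding one entry to `HANDLERS`.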

What to Learn Next

You’ve just installed a fusion reactor in your automation workshop. You have access to near-instantaneous intelligence. The bottleneck is no longer the AI’s thinking speed; it’s how fast you can get data in and out of it.

So, what’s next? We need to connect this reactor to something that can *act* in the real world.

In the next lesson of this course, we’re going to do exactly that. We’ll take our Groq-powered brain and plug it into a telephony system to create a basic AI agent that can answer a phone call, understand the caller’s intent, and provide a real-time response. You’ll see firsthand why latency matters more than almost anything else in conversational AI.

You’ve mastered speed. Now it’s time to give it a voice.
