The Intern Who Couldn’t Follow Instructions

Picture this. You hire a new intern. They’re brilliant. They’ve read every book ever written, speak a dozen languages, and can write a Shakespearean sonnet about your quarterly earnings report. So, you give them their first task.

“Hey, could you check the weather in London for me?”

You expect them to go to a weather website and come back with “15 degrees and cloudy.”

Instead, they return with a five-page essay on the meteorological effects of the North Atlantic Oscillation, the cultural significance of fog in Victorian literature, and a detailed history of the trench coat. They never actually checked the weather.

That brilliant-but-useless intern is what a Large Language Model (LLM) like GPT-4 is by default. It can talk, but it can’t *do* anything. It has no hands. It can’t click buttons, check databases, or use external tools.

Today, we’re giving our AI intern hands. This technique is called Function Calling, and it’s the single most important skill for moving from toy chatbots to real business automation.

Why This Matters

A business runs on actions, not just conversations. You need to check inventory, update a CRM, book a meeting, or reset a password. An AI that can only talk is a novelty. An AI that can trigger actions is a workforce.

Function calling is the bridge between a user’s messy, conversational request (“uh, I think I need to find that new client’s contact info”) and a perfectly structured, machine-readable command (`get_contact_info(client_name="ACME Corp")`).

This workflow replaces the human in the middle. Instead of a support agent listening to a customer, then manually looking up their order in another system, the AI can structure the request so your code can handle it automatically. This is how you scale. This is how you build systems that make money while you sleep.

What This Tool / Workflow Actually Is

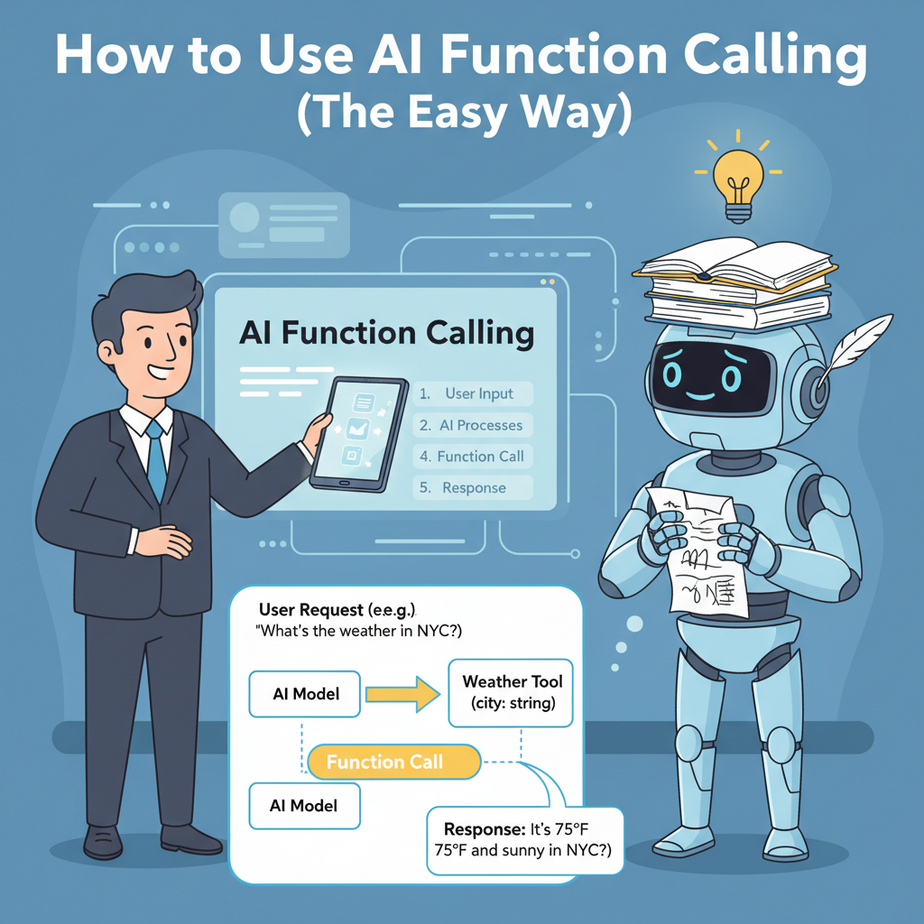

Function calling is a feature of modern LLM APIs (like OpenAI’s, Google’s, etc.) that allows the model to signal when it wants to use an external tool.

What it does:

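It takes a user’s query (e.g., “What’s the stock price for Apple?”) and, if it matches a tool you’ve described, outputs a structured request naming the function and its arguments, conceptually something like `{"function_name": "get_stock_price", "arguments": {"ticker_symbol": "AAPL"}}`. (In OpenAI’s actual response, this lives under `tool_calls`, and the arguments arrive as a JSON-encoded string.)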

What it does NOT do (CRITICAL):

It does not run any code. This is the biggest, most dangerous misconception. The AI doesn’t execute the `get_stock_price` function. It just hands you a perfectly formatted request slip. Your own code is responsible for taking that request, running the *actual* function, and getting the result. The AI is a translator, not an executor.

Think of it as a factory. The AI is the manager who takes a jumbled order from a customer and writes a clear, standardized work ticket. The ticket then goes to the assembly line (your code) to actually build the product.
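To make the “work ticket” idea concrete, here is a minimal offline sketch. The `tool_call` dictionary is hand-written to mimic the shape of one OpenAI tool call (the real SDK gives you an object with attributes like `tool_call.function.name` rather than a dict), and `get_stock_price` is a hypothetical stub. The point is that the model’s output is just data; nothing runs until your code decides to run it.

```python
import json

# Hand-written stand-in for one tool call returned by the model.
# Note that "arguments" is a JSON *string*, not a dict.
tool_call = {
    "id": "call_abc123",  # hypothetical ID
    "type": "function",
    "function": {
        "name": "get_stock_price",
        "arguments": "{\"ticker_symbol\": \"AAPL\"}",
    },
}

def get_stock_price(ticker_symbol):
    """Hypothetical tool: returns a fake price so the example runs offline."""
    return {"ticker_symbol": ticker_symbol, "price": 123.45}

# The "manager" (the model) only wrote the ticket. Nothing has executed yet.
name = tool_call["function"]["name"]
args = json.loads(tool_call["function"]["arguments"])

# The "assembly line" (your code) decides whether and how to execute it.
if name == "get_stock_price":
    result = get_stock_price(**args)
    print(result)  # {'ticker_symbol': 'AAPL', 'price': 123.45}
```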

Prerequisites

This is easier than it sounds. I promise.

- An OpenAI API Key. Go to platform.openai.com, sign up, go to the API Keys section, and create a new secret key. Save it somewhere safe. Yes, it costs a tiny amount of money to use, but we’re talking fractions of a penny for this tutorial.

- Python installed on your computer. If you don’t have it, just search for “Install Python” and follow the instructions for your operating system.

- The OpenAI Python library. Open your terminal or command prompt and run this one command:

```bash
pip install openai
```

That’s it. If you can copy-paste, you can do this.
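If you want to confirm your setup before going further, a quick check like the one below can help. It’s a small optional sketch, not part of the tutorial proper. It assumes you’ve stored your key in the `OPENAI_API_KEY` environment variable, which is where the OpenAI client looks by default when you don’t pass `api_key` explicitly. The function name `check_setup` is just illustrative.

```python
import importlib.util
import os

def check_setup():
    """Report whether the openai package is importable and an API key is set."""
    return {
        "openai_installed": importlib.util.find_spec("openai") is not None,
        "api_key_set": bool(os.environ.get("OPENAI_API_KEY")),
    }

if __name__ == "__main__":
    for item, ok in check_setup().items():
        print(f"{item}: {'OK' if ok else 'MISSING'}")
```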

Step-by-Step Tutorial

We’re going to build a simple system that lets an AI check the weather. We won’t connect to a real weather API; we’ll just fake it. The principle is exactly the same.

Step 1: Define Your Function in Python

First, let’s write the actual tool we want the AI to use. This is just a plain old Python function. It takes a `location` and returns some fake weather data.

```python
import json

def get_current_weather(location):
    """Get the current weather in a given location"""
    # In a real app, you'd call a real weather API here.
    # For this example, we'll return mock data.
    if "tokyo" in location.lower():
        return json.dumps({"location": "Tokyo", "temperature": "15", "unit": "celsius"})
    elif "san francisco" in location.lower():
        return json.dumps({"location": "San Francisco", "temperature": "72", "unit": "fahrenheit"})
    else:
        return json.dumps({"location": location, "temperature": "unknown"})
```

Why? This is our “real world” tool. The AI can’t run this code itself, but this is the action we want to enable.
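Because the tool is plain Python, you can test it on its own before any model is involved. This sketch repeats the mock function so it runs standalone, then calls it directly. Catching bugs here is much cheaper than debugging them through an API round trip.

```python
import json

def get_current_weather(location):
    """Same mock tool as above: returns fake weather as a JSON string."""
    if "tokyo" in location.lower():
        return json.dumps({"location": "Tokyo", "temperature": "15", "unit": "celsius"})
    elif "san francisco" in location.lower():
        return json.dumps({"location": "San Francisco", "temperature": "72", "unit": "fahrenheit"})
    else:
        return json.dumps({"location": location, "temperature": "unknown"})

# Call the tool directly, no model involved, and parse its JSON output.
sf = json.loads(get_current_weather("San Francisco, CA"))
paris = json.loads(get_current_weather("Paris"))
print(sf["temperature"], sf["unit"])  # 72 fahrenheit
print(paris["temperature"])           # unknown
```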

Step 2: Describe the Function to the AI

Now, we have to create an “instruction manual” for the AI. It’s written as a Python list of dictionaries that the library sends to the API as JSON, and the `parameters` section follows the JSON Schema format. It tells the AI the tool’s name, what it’s for, and what information it needs (its parameters).

```python
tools = [
    {
        "type": "function",
        "function": {
            "name": "get_current_weather",
            "description": "Get the current weather in a given location",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "The city and state, e.g. San Francisco, CA",
                    },
                },
                "required": ["location"],
            },
        },
    }
]
```

Why? This is the most important step. If your `description` is vague, the AI won’t know when to use the tool. If your `parameters` are wrong, it won’t know what information to ask for. Be crystal clear.
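Here is one way to tighten a schema, as a sketch. The optional `unit` parameter is hypothetical (our mock tool doesn’t accept it); it shows how a JSON Schema `enum` restricts a value to a fixed list. Because the model can still occasionally send something unexpected, the small `check_args` helper (also illustrative, and far from a full JSON Schema validator) checks required keys and enum values before you run anything.

```python
# Hypothetical extension of the weather schema: a sharper description
# and an optional "unit" parameter restricted with a JSON Schema "enum".
weather_tool = {
    "type": "function",
    "function": {
        "name": "get_current_weather",
        "description": (
            "Get the current weather for a specific city. Use this whenever "
            "the user asks about current conditions or temperature."
        ),
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": "The city and state, e.g. San Francisco, CA",
                },
                "unit": {
                    "type": "string",
                    "enum": ["celsius", "fahrenheit"],
                    "description": "Temperature unit to report. Optional.",
                },
            },
            "required": ["location"],
        },
    },
}

def check_args(args, tool):
    """Return a list of problems with model-supplied args (empty if none)."""
    schema = tool["function"]["parameters"]
    problems = [f"missing '{k}'" for k in schema.get("required", []) if k not in args]
    for key, value in args.items():
        spec = schema["properties"].get(key)
        if spec is None:
            problems.append(f"unexpected '{key}'")
        elif "enum" in spec and value not in spec["enum"]:
            problems.append(f"'{key}' must be one of {spec['enum']}")
    return problems

print(check_args({"location": "Tokyo", "unit": "kelvin"}, weather_tool))
```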

Step 3: Make the First API Call

Now we send our user’s question and the tool manual to OpenAI. We tell it what the user said and what tools it has available.

```python
import os
from openai import OpenAI

# It's best practice to set your key as an environment variable
# client = OpenAI(api_key=os.environ.get("OPENAI_API_KEY"))
# But for this simple script, you can also hardcode it (don't do this in production!)
client = OpenAI(api_key="YOUR_API_KEY_HERE")

messages = [{"role": "user", "content": "What's the weather like in San Francisco?"}]

response = client.chat.completions.create(
    model="gpt-4o",  # Or any model that supports function calling
    messages=messages,
    tools=tools,
    tool_choice="auto",  # Let the model decide whether to call a function
)

response_message = response.choices[0].message
```

Why? We’re not asking for a chat response. We’re asking the model to either chat *or* tell us if it wants to use a tool. The `tools` parameter is how we pass it the instruction manual.
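`tool_choice="auto"` is only one option. The Chat Completions API also accepts `"none"` (answer in plain text), `"required"` (call at least one tool), or an object naming a specific function to force. The helper below is a hypothetical convenience, not part of the OpenAI library: it just builds the keyword arguments you would pass to `client.chat.completions.create(**kwargs)`, so you can see each form side by side.

```python
def build_request(messages, tools, mode="auto", model="gpt-4o"):
    """Assemble keyword arguments for client.chat.completions.create(**kwargs).

    mode: "auto" lets the model decide, "none" forbids tool calls,
    "required" forces at least one tool call, and any other string is
    treated as the name of the one function the model must call.
    """
    if mode in ("auto", "none", "required"):
        tool_choice = mode
    else:
        tool_choice = {"type": "function", "function": {"name": mode}}
    return {"model": model, "messages": messages, "tools": tools, "tool_choice": tool_choice}

# Force the weather tool, whatever the user wrote
kwargs = build_request(
    [{"role": "user", "content": "Tokyo?"}], tools=[], mode="get_current_weather"
)
print(kwargs["tool_choice"])
# With a real client: response = client.chat.completions.create(**kwargs)
```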

Step 4: Check if the AI Wants to Use a Tool

Let’s inspect the response. If the AI decided to use our tool, the message will include a `tool_calls` list with one entry per requested call. If not, `tool_calls` will be `None`.

```python
tool_calls = response_message.tool_calls

if tool_calls:
    print("The AI wants to call a function!")
    # The AI has not executed anything yet. It just gave us the request.
    print(tool_calls)
else:
    print("The AI responded with a regular message.")
    print(response_message.content)
```

Why? Your code needs a fork in the road. If there are tool calls, proceed to the next step. If not, just display the chat message. This logic is essential for building a robust agent.
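That fork can be wrapped in a tiny function and tested without calling the API. In this sketch, `route_message` is a hypothetical helper, and `SimpleNamespace` objects stand in for `response.choices[0].message` because they support the same attribute access (`.content`, `.tool_calls`). Using `if message.tool_calls:` also treats an empty list the same as `None`.

```python
from types import SimpleNamespace

def route_message(message):
    """Return ("tools", calls) if the model requested tools, else ("text", content)."""
    if message.tool_calls:
        return "tools", message.tool_calls
    return "text", message.content

# Offline stand-ins shaped like the SDK's message objects
text_reply = SimpleNamespace(content="Hello! How can I help?", tool_calls=None)
tool_reply = SimpleNamespace(
    content=None,
    tool_calls=[SimpleNamespace(
        id="call_1",
        function=SimpleNamespace(
            name="get_current_weather",
            arguments='{"location": "San Francisco, CA"}',
        ),
    )],
)

print(route_message(text_reply))     # ('text', 'Hello! How can I help?')
kind, calls = route_message(tool_reply)
print(kind, calls[0].function.name)  # tools get_current_weather
```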

Step 5: Execute the Function Yourself

If `tool_calls` exists, we need to loop through them (the AI could request multiple), get the function name and arguments, and run our *actual* Python function.

```python
# This part only runs if tool_calls is not None
available_functions = {
    "get_current_weather": get_current_weather,
}

# Grab the first tool call (for simplicity)
first_tool_call = tool_calls[0]
function_name = first_tool_call.function.name
function_to_call = available_functions[function_name]
function_args = json.loads(first_tool_call.function.arguments)

# Call the actual Python function with the arguments provided by the AI
function_response = function_to_call(
    location=function_args.get("location")
)

print(f"Our Python function returned: {function_response}")
```

Why? This is where the action happens! We’re using the structured data from the AI to run real code. Notice the `available_functions` dictionary—this is a safe way to map the AI’s requested function name to the actual code you want to run.
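The lookup above raises an exception if the model names an unknown function or sends arguments that aren’t valid JSON. A slightly more defensive variant, sketched below, turns those cases into error payloads the model can read. The name `dispatch` is hypothetical, and it unpacks arguments with `**args` instead of picking out `location` by hand, which assumes the parameter names in your schema match your Python function’s parameter names.

```python
import json

def dispatch(name, raw_arguments, registry):
    """Run a whitelisted tool and always return a JSON string for the model.

    Unknown function names and malformed arguments become error payloads
    instead of exceptions, so the conversation can continue.
    """
    func = registry.get(name)
    if func is None:
        return json.dumps({"error": f"Unknown function: {name}"})
    try:
        args = json.loads(raw_arguments)
    except json.JSONDecodeError:
        return json.dumps({"error": "Arguments were not valid JSON"})
    if not isinstance(args, dict):
        return json.dumps({"error": "Arguments must be a JSON object"})
    try:
        return func(**args)
    except TypeError as exc:  # usually a missing or unexpected keyword argument
        return json.dumps({"error": f"Bad arguments: {exc}"})

def get_current_weather(location):
    """Minimal mock tool for the demo."""
    return json.dumps({"location": location, "temperature": "unknown"})

registry = {"get_current_weather": get_current_weather}
print(dispatch("get_current_weather", '{"location": "Paris"}', registry))
print(dispatch("delete_database", "{}", registry))
```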

Step 6: Send the Result Back to the AI

The AI is still waiting. It asked a question (“can I run the weather tool?”) and we have the answer. We need to go back to the API and give it that answer. This “closes the loop.”

```python
# Append the AI's previous message to our message history
messages.append(response_message)

# Append the function's response to our message history.
# (This only answers the first tool call. If the model requested several,
# each tool_call_id needs its own "tool" message; the complete script
# below loops over all of them.)
messages.append(
    {
        "tool_call_id": first_tool_call.id,
        "role": "tool",
        "name": function_name,
        "content": function_response,
    }
)

# Make a second API call with the complete conversation history
second_response = client.chat.completions.create(
    model="gpt-4o",
    messages=messages,
)

print("\nFinal AI Response:")
print(second_response.choices[0].message.content)
```

Why? The API is stateless: the model doesn’t remember your first request. By sending the entire conversation history back, including its own request and our tool’s result, we give it the context it needs to provide a final, helpful, natural-language answer.
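When the model requests several tools at once, the Chat Completions API expects a `tool` message for every `tool_call_id` in the assistant message before it will continue. Here is a sketch of a helper (the name `tool_result_messages` is illustrative) that answers all of them. The tool calls are `SimpleNamespace` stand-ins with made-up IDs so the example runs offline.

```python
import json
from types import SimpleNamespace

def tool_result_messages(tool_calls, registry):
    """Build one role="tool" message per tool call, matched by tool_call_id."""
    results = []
    for call in tool_calls:
        func = registry.get(call.function.name)
        if func is None:
            content = json.dumps({"error": f"Unknown function: {call.function.name}"})
        else:
            content = func(**json.loads(call.function.arguments))
        results.append({"role": "tool", "tool_call_id": call.id, "content": content})
    return results

def get_current_weather(location):
    """Minimal mock tool for the demo."""
    return json.dumps({"location": location, "temperature": "unknown"})

# Two parallel calls, shaped like SDK tool-call objects (IDs are made up)
calls = [
    SimpleNamespace(id="call_1", function=SimpleNamespace(
        name="get_current_weather", arguments='{"location": "Tokyo"}')),
    SimpleNamespace(id="call_2", function=SimpleNamespace(
        name="get_current_weather", arguments='{"location": "Paris"}')),
]
for msg in tool_result_messages(calls, {"get_current_weather": get_current_weather}):
    print(msg["tool_call_id"], msg["content"])
```

In the tutorial’s flow, you would call `messages.append(response_message)` and then `messages.extend(tool_result_messages(tool_calls, available_functions))` before the second API call.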

Complete Automation Example

Here is the full, copy-paste-ready script. Just replace `"YOUR_API_KEY_HERE"` with your actual key.

```python
import json
from openai import OpenAI

# --- 1. Define your real-world function ---
def get_current_weather(location):
    """Get the current weather in a given location"""
    print(f"Calling get_current_weather for {location}")
    if "tokyo" in location.lower():
        return json.dumps({"location": "Tokyo", "temperature": "15", "unit": "celsius"})
    elif "san francisco" in location.lower():
        return json.dumps({"location": "San Francisco", "temperature": "72", "unit": "fahrenheit"})
    else:
        return json.dumps({"location": location, "temperature": "unknown"})

# --- Main execution logic ---
def run_conversation():
    # --- 2. Describe your function to the AI ---
    tools = [
        {
            "type": "function",
            "function": {
                "name": "get_current_weather",
                "description": "Get the current weather in a given location",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "location": {
                            "type": "string",
                            "description": "The city and state, e.g. San Francisco, CA",
                        },
                    },
                    "required": ["location"],
                },
            },
        }
    ]

    client = OpenAI(api_key="YOUR_API_KEY_HERE")
    messages = [{"role": "user", "content": "What's the weather like in Tokyo?"}]

    # --- 3. Make the first API call ---
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=messages,
        tools=tools,
        tool_choice="auto",
    )
    response_message = response.choices[0].message
    messages.append(response_message)  # Append the assistant's message

    # --- 4. Check if the AI wants to use a tool ---
    tool_calls = response_message.tool_calls
    if tool_calls:
        available_functions = {
            "get_current_weather": get_current_weather,
        }
        for tool_call in tool_calls:
            # --- 5. Execute the function ---
            function_name = tool_call.function.name
            function_to_call = available_functions[function_name]
            function_args = json.loads(tool_call.function.arguments)
            function_response = function_to_call(location=function_args.get("location"))

            # --- 6. Send the result back to the AI (one message per tool call) ---
            messages.append(
                {
                    "tool_call_id": tool_call.id,
                    "role": "tool",
                    "name": function_name,
                    "content": function_response,
                }
            )

        # Make the second API call with the tools' results
        second_response = client.chat.completions.create(
            model="gpt-4o",
            messages=messages,
        )
        print(second_response.choices[0].message.content)
    else:
        # No tool was needed: show the model's regular reply instead of printing nothing
        print(response_message.content)

# Run the conversation
if __name__ == "__main__":
    run_conversation()
```

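When you run this, you’ll first see the line printed by the tool (something like `Calling get_current_weather for Tokyo`), followed by the model’s answer, for example “The current weather in Tokyo is 15°C.” The exact wording varies from run to run. Success! The AI used your tool to answer the question.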
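The script above makes exactly two API calls. Sometimes a model needs a tool result before it knows which tool to call next, so a common generalization is a loop that keeps going until the model answers in plain text. Below is a sketch of that loop under stated assumptions: `run_tool_loop` is a hypothetical name, and `create` is any callable with the same keyword arguments as `client.chat.completions.create`. That lets the offline `fake_create` below stand in for the real API so you can test the control flow without a key.

```python
import json
from types import SimpleNamespace

def run_tool_loop(create, messages, tools, registry, max_rounds=5):
    """Keep calling the model until it replies without requesting tools."""
    for _ in range(max_rounds):
        response = create(model="gpt-4o", messages=messages, tools=tools)
        message = response.choices[0].message
        if not message.tool_calls:
            return message.content  # final natural-language answer
        messages.append(message)  # the assistant's tool request
        for call in message.tool_calls:
            func = registry.get(call.function.name)  # whitelist lookup
            if func is None:
                content = json.dumps({"error": f"Unknown function: {call.function.name}"})
            else:
                content = func(**json.loads(call.function.arguments))
            messages.append({"role": "tool", "tool_call_id": call.id, "content": content})
    raise RuntimeError("Model kept requesting tools; stopping after max_rounds.")

# --- Offline demo: a fake `create` that asks for the weather once, then answers ---
def fake_create(**kwargs):
    last = kwargs["messages"][-1]
    if isinstance(last, dict) and last.get("role") == "tool":
        data = json.loads(last["content"])
        reply = SimpleNamespace(
            content=f"It is {data['temperature']} in {data['location']}.", tool_calls=None)
    else:
        call = SimpleNamespace(id="call_1", function=SimpleNamespace(
            name="get_current_weather", arguments='{"location": "Tokyo"}'))
        reply = SimpleNamespace(content=None, tool_calls=[call])
    return SimpleNamespace(choices=[SimpleNamespace(message=reply)])

def get_current_weather(location):
    """Mock tool for the demo."""
    return json.dumps({"location": location, "temperature": "15 C"})

history = [{"role": "user", "content": "What's the weather like in Tokyo?"}]
answer = run_tool_loop(fake_create, history, tools=[],
                       registry={"get_current_weather": get_current_weather})
print(answer)  # It is 15 C in Tokyo.
```

With the real client, you would pass `client.chat.completions.create` as `create`, along with your `tools` list and `available_functions`. The `max_rounds` cap keeps a confused model from looping forever.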

Real Business Use Cases

This isn’t just for weather bots. This is the pattern for almost all serious AI automation.

- E-commerce Support Bot: A customer asks, “Do you have the blue running shoes in size 10?” The AI calls your function `check_inventory(product_sku="RUN-BL-10")`, which queries your Shopify or database API.

- SaaS Onboarding Assistant: A new user types, “How do I add a teammate to my project?” The AI understands the intent, asks for the teammate’s email if the user hasn’t given it, and then calls `add_user_to_project(email="new_user@example.com", project_id="abc-123")`.

- Internal CRM Tool: A sales manager asks a Slack bot, “What’s the status of the Globex Corporation deal?” The AI calls `get_deal_status(account_name="Globex Corporation")`, which pulls data from Salesforce.

- Travel Booking Agent: A user says, “Find me a hotel in Paris for next weekend under $300.” The AI structures this into `search_hotels(city="Paris", checkin_date="YYYY-MM-DD", checkout_date="YYYY-MM-DD", max_price=300)`.

- Content Management System: A marketer tells the system, “Draft a blog post about our new product launch.” The AI calls `create_draft_post(title="New Product Launch", tags=["product", "announcement"])`.
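Once you have more than one tool, the nested schema dictionaries get repetitive. One option is a small builder like the sketch below. `make_tool` is a hypothetical helper that produces the same structure as the Step 2 `tools` entry, and the two schemas are illustrative versions of the e-commerce and CRM examples above, not real integrations.

```python
def make_tool(name, description, properties, required=()):
    """Wrap a JSON Schema "properties" dict in the tools-list format from Step 2."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": list(required),
            },
        },
    }

# Hypothetical schemas for two of the use cases above
check_inventory_tool = make_tool(
    "check_inventory",
    "Check stock for one product. Use when a customer asks whether a "
    "specific item, color, or size is available.",
    {"product_sku": {"type": "string", "description": "Internal SKU, e.g. RUN-BL-10"}},
    required=["product_sku"],
)
deal_status_tool = make_tool(
    "get_deal_status",
    "Look up the current stage of a sales deal in the CRM by account name.",
    {"account_name": {"type": "string", "description": "Company name, e.g. Globex Corporation"}},
    required=["account_name"],
)

tools = [check_inventory_tool, deal_status_tool]
```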

The pattern is always the same: messy human language in, structured function call out.

Common Mistakes & Gotchas

- Writing Lazy Descriptions: The most common failure point. If your function description is `"gets weather"`, the AI may not know when to use the tool. If it’s `"Get the current weather forecast for a specified city"`, it will pick the tool far more reliably. Be specific.

- Forgetting to Handle Errors: What if your `get_current_weather` function fails because the external API is down? Your code needs to catch that error and return a sensible message to the AI, like `{"error": "Weather service is currently unavailable"}`. Then the AI can tell the user gracefully.

- Assuming the AI Will Always Call a Function: If the user just says “hello,” the `tool_calls` object will be `None`. Your code must handle this and just continue the conversation. Don’t build a system that breaks if a tool isn’t used.

- Security: Never, ever, let the AI’s output be used to run arbitrary code. Our `available_functions` dictionary is a simple security layer: it’s a whitelist of approved functions. If the AI hallucinates and tries to call a function named `delete_database`, the dictionary lookup raises a `KeyError` instead of running anything. That failure is safe, but it still stops the script, so in production, catch it and send an error message back to the AI.
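For the error-handling point, one lightweight pattern is a decorator that converts any exception raised inside a tool into an error JSON string for the model. This is a sketch: `safe_tool` is a hypothetical name, and the `ConnectionError` below simulates an outage rather than calling a real weather service.

```python
import functools
import json

def safe_tool(func):
    """Decorator: turn any exception raised by a tool into an error JSON string."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        try:
            return func(*args, **kwargs)
        except Exception as exc:  # deliberately broad: report to the model, don't crash
            return json.dumps({"error": f"{func.__name__} failed: {exc}"})
    return wrapper

@safe_tool
def get_current_weather(location):
    # Simulated outage to demonstrate the error path
    raise ConnectionError("Weather service is currently unavailable")

print(get_current_weather("London"))
```

The model then receives `{"error": ...}` as the tool result and can explain the problem to the user, rather than the whole conversation dying with a traceback.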

How This Fits Into a Bigger Automation System

Congratulations. You just learned the fundamental building block of modern AI agents. This isn’t just one cool trick; it’s the LEGO brick you’ll use to build castles.

- Voice Agents: A voice system like Twilio transcribes speech to text, sends it to your function-calling script, your script executes a tool, the final AI response is generated, and a text-to-speech service reads it back. You just built the brain for an AI phone agent.

- RAG (Retrieval-Augmented Generation): What if the user asks a question about your internal company documents? You can create a function called `search_documents(query="…")`. The AI will call that function, your code will search a vector database, and the results will be fed back into the second API call. This is how you give an AI knowledge about *your* world.

- Multi-Agent Workflows: You can have a “dispatcher” AI agent whose only job is to route tasks. A user request comes in, and the dispatcher’s only tool is `route_task(agent_name)`, where `agent_name` is `"sales"`, `"support"`, or `"billing"`. This calls another, more specialized AI agent. This is how you build complex, company-wide automation.
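To show how the RAG idea plugs into the same tool pattern, here is a deliberately simple sketch of a `search_documents` tool. It swaps the vector database for naive keyword-overlap scoring over a hypothetical in-memory `DOCUMENTS` dictionary, so it is a stand-in for real retrieval, not an implementation of it. What carries over is the contract: the tool takes a query string and returns JSON the model can read in the second API call.

```python
import json
import re

# Hypothetical in-memory knowledge base; a real system would query a vector database.
DOCUMENTS = {
    "refund-policy": "Customers can request a refund within 30 days of purchase.",
    "shipping": "Standard shipping takes 3 to 5 business days within the US.",
    "security": "All customer data is encrypted at rest and in transit.",
}

def tokenize(text):
    """Lowercase words and numbers as a set (no stemming, no stopword removal)."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def search_documents(query, top_k=2):
    """Rank documents by how many query words they share (naive keyword overlap)."""
    q = tokenize(query)
    scored = [(len(q & tokenize(body)), doc_id, body) for doc_id, body in DOCUMENTS.items()]
    hits = [(s, d, b) for s, d, b in sorted(scored, reverse=True) if s > 0][:top_k]
    return json.dumps([{"id": d, "text": b, "score": s} for s, d, b in hits])

print(search_documents("How many days do I have to request a refund?"))
```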

What to Learn Next

You’ve given your AI hands. It can now interact with the world through the tools you provide.

But it still has no long-term memory and knows nothing about your specific business data. It’s a powerful tool user, but it’s working in the dark.

In the next lesson in our AI Automation course, we’re going to give it a brain. We will build a simple Retrieval-Augmented Generation (RAG) system from scratch. You’ll learn how to take your own documents (PDFs, text files, anything) and turn them into a knowledge base your AI can search and use to answer questions grounded in your own content. We’ll connect it directly to the function-calling skills you learned today.

You’ve mastered action. Next up is knowledge.
